I thought it would be useful to share my perspective on this topic and take a closer look at the technical possibilities offered by generative AI. But let’s start at the very beginning:

Named Entity Recognition (NER) has been around for a while, but it’s still relevant today. I recently had a client situation where the focus was on insurance documents, which contain, for example, policy numbers, insured parties, coverage amounts, claim dates, and types of coverage. All of these entities are expected to be extracted automatically. This is a typical task in NER – a technique in natural language processing that identifies and classifies key elements – so called “entities” – in unstructured text, such as names, dates, amounts, locations, and other relevant information.

It reminded me of the many ways NER can be applied. Whether it’s input management, analyzing financial reports, contracts, or general terms and conditions, handling legally relevant documents, supporting knowledge management, exploring social media, or even in medicine and life sciences – the possibilities are endless.

Entity extraction from lease contracts

Thinking back, I worked on a project analyzing leasing contracts in the context of IFRS 16. “The objective of IFRS 16 is to report information that (a) faithfully represents lease transactions and (b) provides a basis for users of financial statements to assess the amount, timing and uncertainty of cash flows arising from leases. To meet that objective, a lessee should recognize assets and liabilities arising from a lease” (link to source, November 17, 2025). IFRS16 is effective since 1 January 2019.

A lease is a contract that gives one party (the lessee) the right to use an asset owned by another (the lessor) for a period of time in exchange for consideration. If you’ve ever looked at such a contract, you know they’re not exactly tidy datasets. These documents are often long, highly unstructured, and packed with dense legal language. A single contract might stretch over dozens of pages, mixing clauses, and appendices.

You’ll typically find key terms scattered throughout the text: the names of the parties, the start and end dates, payment terms, renewal options, asset descriptions, and accounting provisions. But they’re rarely presented in a clean, table-like structure. Instead, important information might appear in the middle of paragraphs, legal definitions, or annexes – making it a real challenge for both humans and machines to extract meaning efficiently.

So the core entities in such a document are not just names, but roles with legal, accounting, and operational meaning. Some of them are:

| Entity type | Description | Semantic variability | Examples |

|---|---|---|---|

| Lessee | The entity that uses the asset and recognizes a right-of-use asset and lease liability. | High – can be a company, division, joint venture, or individual. Context may vary across leases. | “Horizon Consulting GmbH”, “Delta Innovations Ltd.”, “Brightwave Services Ltd.”, “John Doe” |

| Lessor | The entity that owns the asset and grants the right of use to a lessee in return for payments. | High – could be a bank, real estate company, or leasing provider; can itself be a sub-lessor. | “Prime Properties AG”, “TechLease Solutions PLC”, “AutoFleet Leasing Group” |

| Asset | The physical or intangible item being leased. | Very High – can range from real estate, vehicles, IT equipment, etc. | “Commercial warehouse”, “50 high-performance laptops”, “Corporate fleet of 25 hybrid vehicles” |

| Lease period | The duration for which the lease is enforceable. | Medium – includes optional renewals and termination rights | “10-year lease term”, “36 months”, “Contract valid until December 31, 2029” |

| Payment | The monetary obligations under the lease. | High – may involve fixed, variable, contingent, and residual payments. | “Monthly rent of 18,500 euro”, “Quarterly payment of 7,200 euro”, “Monthly payment of 12,900 euro” |

| Modification | Contract clauses changing terms. | High – semantically complex. | “Lease may be extended for an additional 5 years”, “Option to replace up to 10 devices after 18 months”, “Maintenance shifts after 2 years” |

| Address | The site of the leased asset. | Medium – geographic location | “45 Kingsbury Road, London”, “Suite 305, 300 Fairfield Street, Leeds LS11 2QR, UK”, “Unit 12, 87 Willow Park Drive, Manchester M14 3QS, UK” |

That’s exactly where Named Entity Recognition (NER) becomes valuable. By training models to detect and label key elements – such as lessee, lessor, lease term, payment amount, or asset type – we can start turning this unstructured text into structured, analyzable data. To achieve this, the focus that time was on classic NLP pipelines, machine learning (ML), and deep learning (DL) models, where specialized classifiers had to be trained with a significant amount of annotated data.

The objective of the following experiment is to explore how the entities mentioned above can be extracted from a leasing contract using a Small Language Model (SLM)-based approach. Ensuring that a SLM or Large Language Model (LLM) performs entity extraction correctly, requires several steps. The key aspect of the following approach is confidence filtering: When you ask a SLM or LLM to extract entities, it outputs text, not probabilities. Unlike fine-tuned classification models, it doesn’t say, “I’m 95% sure the lessor name is “ABC properties””. SLM or LLM entity scores are subjective, not like ML probabilities. So to build confidence filtering, you need to derive uncertainty indirectly. Another assumption is, if the language model extracts the same entities multiple times, it’s likely confident.

Methodology used

Here’s a structured approach to achieve this:

- Define a prompt template which contains

- clear instructions and guidance what the LLM should do

- entity definitions

- extraction rules

- a set of valid examples in the desired output format

- Run multiple generations to guarantee extraction stability

- Vary sampling parameters, like temperature, top-p, top-k etc.

- Prompt the model to output a confidence score alongside each extracted entity

- Aggregate results and create a final validation score

- Calculate how often the extracted entity value was found in a contract across all runs

- Calculate average confidence score across most often found entity value

- Calculate combined confidence score based on the percentage of the most identified entity value and its average confidence value divided by two

- Mark as “human review needed” if combined confidence value is below a defined threshold

For illustration I will just reference to one of the sample lease contracts (contract type real estate) I’ve used for my tests, which is available here (November 14, 2025). The contract has around 6.300 characters, without spaces. The entity values to be expected to be extracted via the language model are listed in the following table. Here you can see, its not just about key word extraction, its about semantically correct recognition of whole sentences or parts of it.

| Entity type | Value (Ground Truth) |

|---|---|

| Lessor | ABC Properties |

| Lessee | Silvia Mando |

| Asset | dwelling |

| LeasePeriod | one year, beginning July 1, 2012 and ending June 30, 2013 |

| Payment | $685 per month, due and payable monthly in advance on the 1st day of each month during the term of this agreement |

| Modification | Upon expiration, this Agreement shall become a month-to-month agreement AUTOMATICALLY, UNLESS either Tenants or Owners notify the other party in writing at least 30 days prior to expiration that they do not wish this Agreement to continue on any basis. |

| Address | 9876 Cherry Avenue, Apartment 426 |

Setup

In my setup, I focused to use SLMs, hosted locally on consumer-grade hardware (Apple MacBook Pro, M1 Max, 32 GB). To run those models on my local machine, I used Ollama. As model I’ve chosen Granite 4, IBMs latest foundation model family which is available open source on Hugging Face and which can also be hosted locally through Ollama models. For my experiments I used different models from this model family, like Granite-4.0-Micro (3B), Granite-4.0-H-Micro (3B) and some others. Granite-4.0-Micro is “a 3B dense model with a conventional attention-driven transformer architecture” whereas Granite-4.0-H-Micro is “a dense hybrid model with 3B parameters” (link to source, November 17, 2025)

Below is the prompt template I used for my experiments. It defines the model’s role as an “expert information extractor specializing in IFRS 16”. It also specifies the entities to focus on, outlines some extraction rules to follow, and provides three sample outputs: one for real estate, one for IT, and one for car leasing. For more details see the Granite Language Cookbook Prompt Engineering Guide.

def build_prompt(text: str) -> str:

return f"""

<|start_of_role|>system<|end_of_role|>

You are an expert information extractor specializing in IFRS 16 lease-contract analysis.

Your task is to extract specific entities from the input text and return a single valid JSON object.

You must only extract text spans EXACTLY as they appear in the given text. Do NOT change, modify, correct, translate, paraphrase, shorten, complete, or generate text.

Entity definitions:

- Lessee: The party that uses the asset and recognizes an IFRS-16 right-of-use asset and lease liability.

- Lessor: The party that owns the asset and grants the right of use.

- Asset: The underlying asset being leased.

- LeasePeriod: The enforceable term of the lease.

- Payment: Monetary obligations under the lease.

- Modification: Any contractual clause or description indicating changes to the lease terms.

- Address: The physical location of the leased asset.

Extraction rules:

1. Collect all values found for each entity. Store them in the "value" field.

2. If you find an entity value create a confidence score:

- Use 0.90–0.99 if extraction is highly certain.

- Use <0.90 if uncertain or ambiguous.

2. If no entity value is found:

- Set "value" to "no results"

- Set "confidence": 0.0

4. Do NOT infer entity values.

5. Do NOT invent or produce any entity value that is not literally present in the given text.

6. Entities may be spread across multiple parts of the text. Collect all values for this entity, preserve their original wording, and combine all occurrences in one text string.

7. Each entity MUST appear in the output JSON. Even if the value is "no results".

8. Output MUST be a valid JSON, with no explanations.

Example output 1:

{{

"Lessee": {{"value": "Horizon Consulting GmbH", "confidence": 0.95}},

"Lessor": {{"value": "Prime Properties AG", "confidence": 0.91}},

"Asset": {{"value": "Commercial warehouse", "confidence": 0.90}},

"LeasePeriod": {{"value": "10-year lease term", "confidence": 0.9}},

"Payment": {{"value": "Monthly rent of 18,500 euro", "confidence": 0.90}},

"Modification": {{"value": "Lease may be extended for an additional 5 years upon mutual agreement", "confidence": 0.93}},

"Address": {{"value": "45 Kingsbury Road, London", "confidence": 0.97}}

}}

Example output 2:

{{

"Lessee": {{"value": "Delta Innovations Ltd.", "confidence": 0.95}},

"Lessor": {{"value": "TechLease Solutions PLC", "confidence": 0.95}},

"Asset": {{"value": "50 high-performance laptops", "confidence": 0.91}},

"LeasePeriod": {{"value": "36 months", "confidence": 0.93}},

"Payment": {{"value": "Quarterly payment of 7,200 euro", "confidence": 0.94}},

"Modification": {{"value": "Option to replace up to 10 devices after 18 months", "confidence": 0.96}},

"Address": {{"value": "Suite 305, 300 Fairfield Street, Leeds LS11 2QR, UK", "confidence": 0.97}}

}}

Example output 3:

{{

"Lessee": {{"value": "Brightwave Services Ltd.", "confidence": 0.95}},

"Lessor": {{"value": "AutoFleet Leasing Group", "confidence": 0.97}},

"Asset": {{"value": "Corporate fleet of 25 hybrid vehicles", "confidence": 0.98}},

"LeasePeriod": {{"value": "Contract valid until December 31, 2029", "confidence": 0.93}},

"Payment": {{"value": "Monthly payment of 12,900 euro", "confidence": 0.97}},

"Modification": {{"value": "Maintenance responsibilities shift to lessee after the second year", "confidence": 0.96}},

"Address": {{"value": "Unit 12, 87 Willow Park Drive, Manchester M14 3QS, UK", "confidence": 0.99}}

}}

Final Instruction:

After reading the input text, extract the entities according to the rules above and output only the JSON object.

Input: "{text}"

<|end_of_text|>

"""

Evaluation

This is not a systematic academic evaluation. The goal is to present a methodology and demonstrate its feasibility, with only minimal evaluation involved. In the following, the focus is on a single document (contract type real estate) as an illustrative example as already mentioned above. A more detailed evaluation would require analyzing additional contracts (and contract types) and evaluation metrics such as recall, precision, and F1 for comparison. Because the results (especially confidence values) may vary slightly from run to run, I chose to focus on discussing the outcomes rather than listing tables of confidence scores.

To evaluate the quality of entity extraction across the different models, I conducted several experiments that varied primarily in the number of model runs, specifically for N = 3, 5, 7, 9, and 11. For all experiments, the temperature was set to a fixed value of 0.7.

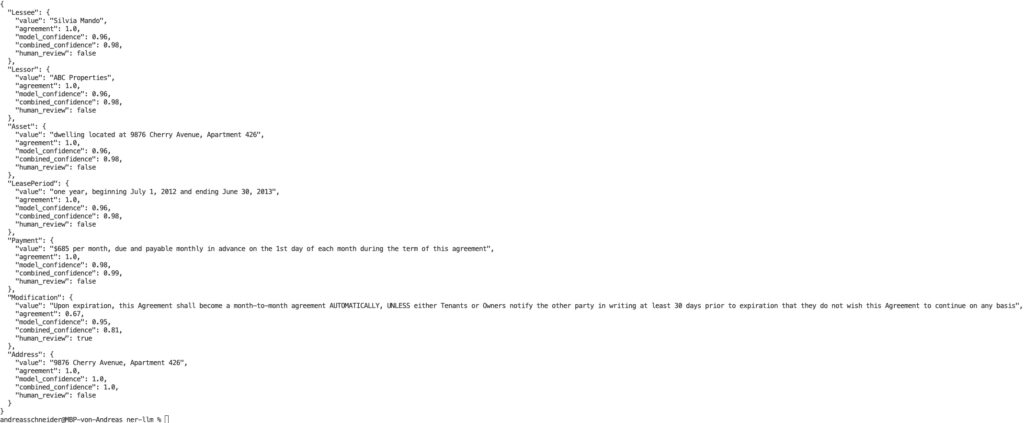

In each experiment, all seven entity types and there values were expected to be retrieved from the example lease contract. Each JSON result contains the entity, its value extracted from the text, whether human review is needed or not, and several confidence scores. Given the following example – 1.0 – 0.95 – 0.98 – those scores are

- 1.0: How often the extracted entity value was found in the text across all runs. In this case in each run the entity value was identified.

- 0.95: The average confidence score across the most often found entity value. This score is given by the model itself in each run (self-assessment).

- 0.98: Combined confidence score based on the percentage of the most identified entity value and its average confidence value divided by two. The threshold for human review was set to 0.90. This value depends on the use case and also on how critical the extraction of inaccurate entity values is.

In the following, I would like to discuss the results from the perspective of the seven entity types examined, focusing on my two personal favorite models for this specific entity extraction task: Granite-4.0-H-Micro (3B) and Granite-4.0-Micro (3B).

Granite-4.0-H-Micro (3B) – hybrid model

Overall, the model consistently extracted each entity correctly. In some cases, it regenerated the value instead of copying it directly from the text – despite the extraction rules defined in the prompt template – but the values themselves were still accurate. It reliably identified Lessor, Lessee, Address, and Asset, although sometimes it added minor extra information (e.g., appending the address to the Asset entity). The combined confidence scores for these entities were consistently between 0.95 and 0.99, well above the manual threshold of 0.90, meaning no human review was required.

For LeasePeriod, Payment, and Modification, Granite-4.0-H-Micro (3B) also produced correct results, though it occasionally reformulated the values instead of extracting them verbatim. For example, it generated “one year from July 1, 2012 to June 30, 2013” instead of the original “one year, beginning July 1, 2012 and ending June 30, 2013.” Similarly, for Modification, it produced “Automatic transition to a month-to-month agreement upon expiration unless either party notifies the other at least 30 days beforehand,” which is a simplified version of the source text (see screenshot below).

Because the model paraphrased these values – while still keeping them semantically correct – the confidence scores dropped. Even if the meaning was accurate, the phrasing differed slightly across runs, leading to less consistent string matches triggering human review (see screenshot below for examples).

These results were achieved with N = 3 runs, which is strong in both performance and quality. Similar patterns occurred at N = 9 and N = 11, where most outputs remained correct but confidence scores dropped due to paraphrasing.

Granite-4.0-Micro (3B) – model with a conventional attention-driven transformer

The results obtained with Granite-4.0-Micro (3B) were comparable to those of Granite-4.0-H-Micro (3B). It also reliably identified Lessor, Lessee, Address, and Asset, although it occasionally added minor extra information to the Asset entity. What this model never did was generate text on its own. For this reason, the outputs were overall more reliable, as it always followed the corresponding instructions in the prompt template. This led – as shown in the screenshot below – to an almost 100% ground truth match (except for Asset, where the address was appended). Optimal results were achieved with N = 3. Only for the Modifications entity (see screenshot) human review is still required, due to a confidence score of 0.81, slightly below the threshold of 0.90.

Summary

Both models offered a good balance between the number of model runs (N = 3), execution time (the average across all three runs was between 20–30 seconds on my local machine), and answer quality. The results are particularly impressive considering that these models were not specifically trained for this domain (IFRS 16).

From my personal experience, however, Granite-4.0-Micro proved to be more reliable, as it never regenerated text on its own. Given the limited number of runs and the high extraction quality, the cost-benefit perspective looks favorable for both models, even though the prompt template contains a significant amount of descriptive text, which increases input token usage.

Furthermore, this approach allows for additional parameters to be varied, such as temperature, top-p, top-k, or incorporating additional models (even within a single run for cross-model agreement).

The experiments also demonstrated that it is possible to ensure stable extraction of entity values. To further improve the accuracy of extraction for entity types with higher variability, such as LeasePeriod, Payment, and Modification, targeted fine-tuning could be considered.

A limitation of my experiments is the restricted ability to generalize across a wide range of lease contracts and contract types, as reflected in the examples provided in the prompt template. This experiment is intended more as an indicator of practical applicability rather than a systematic, scientific evaluation. The focus was on demonstrating the approach presented at the beginning and its general feasibility.

Conclusion

The two models mentioned did a good job extracting complex semantic entities from a lease contract without any domain-specific fine-tuning – simply through few-shot prompting. This really shows how capable SLMs can be, while offering some big advantages over larger frontier models: they’re cheaper, run smoothly on standard consumer hardware, and can be hosted entirely on-premises, keeping your data secure.

Focusing on a language model approach doesn’t mean existing solutions need to be rebuilt. A hybrid approach is often the most effective: continue relying on established NER models and deterministic or rule-based workflows, and introduce SLMs (or LLMs) where semantic variability is high. While extraction results from language models may vary between runs, they excel at capturing complex semantic entities – tasks that would otherwise require training new models from scratch. For this reason, they should be viewed as a complementary addition rather than a replacement, enhancing existing ML, DL, and rule-based components while integrating smoothly into current business processes.

💬 Comments or suggestions? I’d love to hear from you! You can leave a comment on LinkedIn, send me a direct message or connect with me, or use the contact form on my blog. I look forward to hearing your story!

💡Liked what you read? Subscribe to my blog and get new posts delivered straight to your inbox – so you never miss what’s next.