It feels like we’re traveling in a rocket while we’re still building it.

This is a quote from Miriam Kugler at the KPMG Future Summit 2024, given in response to the question of where she sees the future of work with Artificial Intelligence in a year and a half. In my view, more than a year and a half later, that sentence is still highly relevant given the rapid pace of developments in this field. Meanwhile, the technological spotlight has shifted from Generative AI toward AI agents and agentic AI.

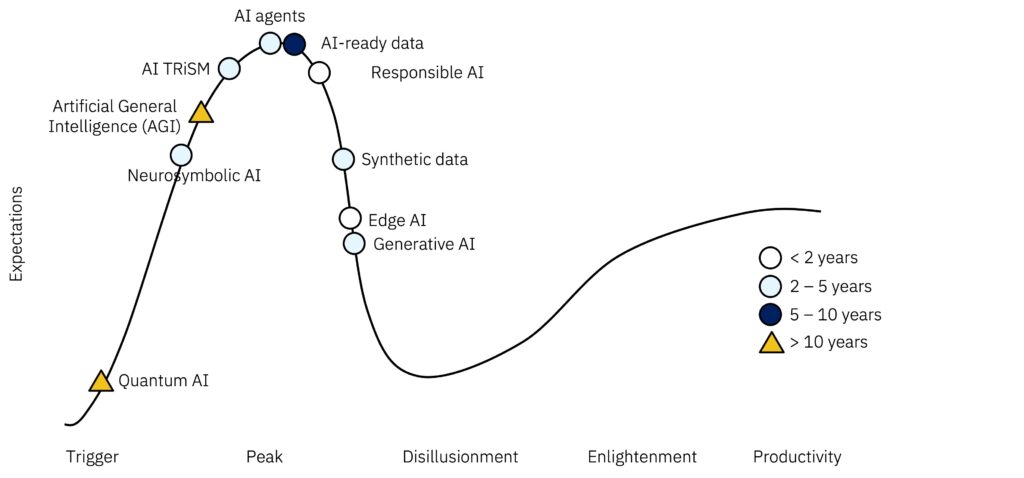

According to the 2025 Gartner Hype Cycle for Artificial Intelligence, Generative AI is on its way into the trough of disillusionment, which means that the technology is not meeting all expectations, and investment and attention are declining. AI agents, on the other hand, are at the peak of hype, where rapid breakthroughs are expected and, in addition to a few successful projects, there are also many failed ones. In the Gartner Hype Cycle, “expectations” do not directly represent a measurable number, but rather the level of attention given to a technology. How strongly do companies, investors, the media, or the public believe in the benefits of the technology? It is a measure of perception, enthusiasm, and trust, not just technical performance.

Agents’ core capabilities

But what exactly is behind this much-discussed topic, and what potential can it unlock? From a broader perspective, agentic AI does not replace machine learning, deep learning, AI assistants, or even generative AI — it builds on them. So what sets it apart from previous approaches?

AI agents have tools at their disposal, which gives them the ability to retrieve additional information from the internet, or access data from enterprise backend systems. To use these tools effectively, an AI agent needs a (large) language model with so-called “agent-like” capabilities. Those can generally be grouped into four categories.

- Perceive: The AI agent typically uses tools to gather information from its connected environment. For example, by interacting with enterprise backend systems, the agent can access knowledge that goes beyond the language model’s training data.

- Reason: This refers to the agent’s ability to analyze information, plan actions, and make decisions. It also includes the capacity to “remember” information and refer back to it when needed — a capability commonly described as memory.

- Act: Agents are able to execute tasks across systems, workflows, or tools. In this context, the ReAct (Reasoning and Acting) framework is often referenced: it has the agent alternate between reasoning steps and actions, so that it “thinks” before it acts.

- Learn & adapt: To improve, agents leverage feedback and experience from users, enabling them to optimize and learn over time.
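
To make the four capabilities a bit more tangible, here is a minimal Python sketch of such a loop. It is only an illustration under stated assumptions: `SimpleAgent`, `lookup_customer`, and the sample record are hypothetical names and data, and the `reason` method is a hard-coded stand-in for what would normally be a language model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Tool = Callable[[str], str]


def lookup_customer(customer_id: str) -> str:
    """Hypothetical backend tool; a real one would query an enterprise system."""
    records = {"C-1001": "income=4200;existing_loans=1"}  # made-up sample data
    return records.get(customer_id, "unknown customer")


@dataclass
class SimpleAgent:
    tools: Dict[str, Tool]
    memory: List[str] = field(default_factory=list)    # Reason: remembered observations
    feedback: List[str] = field(default_factory=list)  # Learn & adapt: collected feedback

    def reason(self) -> str:
        # Stand-in for a language model call that picks the next step.
        return "lookup_customer" if not self.memory else "finish"

    def act(self, tool_name: str, argument: str) -> str:
        # Act: execute the chosen tool and return what it produced.
        return self.tools[tool_name](argument)

    def run(self, customer_id: str) -> str:
        # Alternate between reasoning and acting until the agent decides to finish.
        while (step := self.reason()) != "finish":
            observation = self.act(step, customer_id)
            self.memory.append(observation)  # Perceive: store the new information
        return self.memory[-1]

    def learn(self, user_feedback: str) -> None:
        # Learn & adapt: here feedback is only recorded, not used for optimization.
        self.feedback.append(user_feedback)
```

Calling `SimpleAgent(tools={"lookup_customer": lookup_customer}).run("C-1001")` runs one reason-act-perceive cycle and then stops. A real agent would replace the fixed rule in `reason` with a model that chooses among several tools.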

Agent-based loan processing

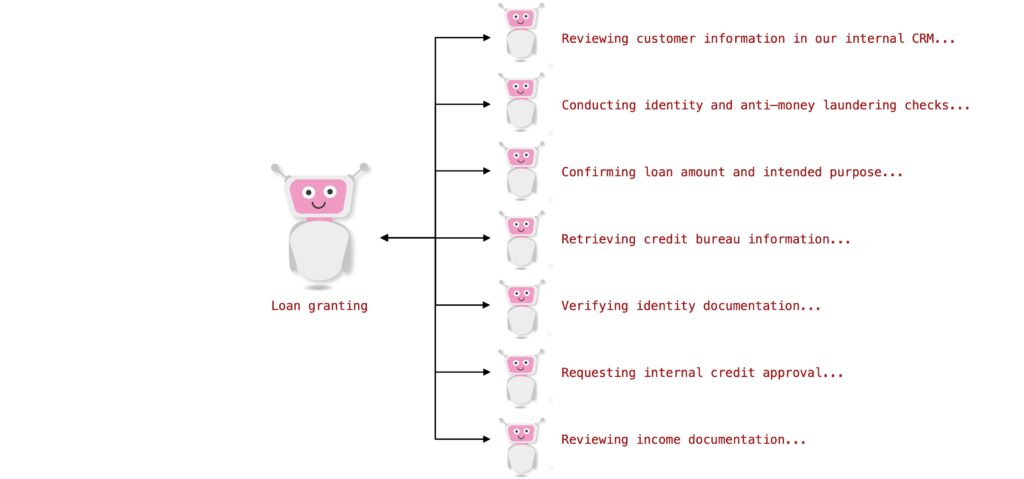

With this understanding of how agents work, we can now move from theory to practice. The following is an illustrative outline of an agent-based application for a consumer loan. To approve a consumer loan, the AI agent must be instructed accordingly, which can be done via a prompt. The prompt makes it possible to control and influence the agent’s behavior to a certain extent by specifying which role the agent has, which tools are available, how to react to user queries, and more. In this case, the agent must go through several process steps before a consumer loan can be approved.
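
As a rough idea of what such an instruction could contain, the following Python sketch assembles a system prompt from a role, a tool list, and the required process steps. The function name `build_system_prompt`, the tool names, and the wording of the prompt are my own assumptions for illustration, not a prescribed template.

```python
from typing import Dict, List


def build_system_prompt(role: str, tools: Dict[str, str], steps: List[str]) -> str:
    """Assemble an illustrative system prompt from role, tools, and process steps."""
    tool_lines = "\n".join(f"- {name}: {description}" for name, description in tools.items())
    step_lines = "\n".join(f"{number}. {step}" for number, step in enumerate(steps, start=1))
    return (
        f"You are {role}.\n\n"
        f"Available tools:\n{tool_lines}\n\n"
        f"Complete these steps in order before approving a loan:\n{step_lines}\n\n"
        "If a step fails or information is missing, do not approve the loan; "
        "ask the user for the missing information instead."
    )


# Hypothetical role, tools, and steps for the consumer loan example.
prompt = build_system_prompt(
    role="a consumer loan assistant",
    tools={
        "check_identity": "validates the applicant's ID card",
        "get_credit_score": "retrieves the applicant's credit score",
    },
    steps=["Verify identity", "Check credit score", "Assess risk"],
)
```

Printing `prompt` shows the role, the tools, and the numbered steps as one block of text that would be passed to the language model.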

And how might that look in practice?

The example shows a multi-agent solution with orchestration. What does that mean? Many specialized agents work together like a team of experts. They can act independently of each other, but orderly interaction is required to complete the overall business process. A higher-level AI agent, the orchestrator, controls the communication. It plans the individual steps, evaluates them, and critically questions the results, repeating any step if necessary. In multi-agent systems, each AI agent performs a specific subtask that is necessary to achieve the overall goal, as visualized in the figure for this example.
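
The orchestration idea can be sketched in a few lines of Python. Everything here is a simplified assumption: the three sub-agents are plain functions rather than model-backed agents, the field names and the score threshold of 600 are invented, and `orchestrate` only mimics the "evaluate and repeat if necessary" behavior with a fixed retry count.

```python
from typing import Any, Callable, Dict, List, Tuple

Application = Dict[str, Any]
SubAgent = Callable[[Application], bool]


def identity_agent(app: Application) -> bool:
    return bool(app.get("id_valid"))


def money_laundering_agent(app: Application) -> bool:
    return not app.get("on_watchlist", False)


def risk_agent(app: Application) -> bool:
    return app.get("credit_score", 0) >= 600  # hypothetical threshold


def orchestrate(
    app: Application,
    agents: List[Tuple[str, SubAgent]],
    max_retries: int = 1,
) -> Tuple[bool, List[str]]:
    """Run each sub-agent in order; repeat a failed step up to max_retries times."""
    log: List[str] = []
    for name, agent in agents:
        for attempt in range(1, max_retries + 2):
            passed = agent(app)
            log.append(f"{name}: {'passed' if passed else 'failed'} (attempt {attempt})")
            if passed:
                break
        else:
            # All attempts failed, so the overall process stops here.
            return False, log
    return True, log


PIPELINE = [
    ("identity", identity_agent),
    ("money_laundering", money_laundering_agent),
    ("risk", risk_agent),
]
```

With deterministic stubs a retry gives the same result every time; the retry only becomes meaningful once a sub-agent is backed by a language model whose output can vary between runs.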

Reliability and autonomy

But when should you entrust a language model with control over a business process, and when should you take control yourself? You are navigating a complex landscape here, from deterministic processes and fixed rules to complete autonomy. Deterministic processes, rules, and clearly defined process steps offer less room for flexibility but greater reliability. Process steps can be repeated and executed in the same way with consistent quality, because the sequence of tasks is determined at design time by the developer. AI agents, on the other hand, offer high flexibility but less reliability in terms of repeatability, due to greater variability. In other words, their behavior is probabilistic: the sequence of tasks is determined at runtime by the agent and the user.

Ultimately, when designing an agentic solution, the key questions are: How much autonomy can I, or do I want to, allow in the execution of a business process, and how critical is this process? For example, there are rules that are easy to implement, such as checking the validity of an ID card as part of an identity check, or comparing a Schufa score (Schufa is a German private credit bureau) with a defined scale. There are also deterministic processes, such as the credit approval process itself, which requires mandatory verification steps that do not allow for any deviations. So fixed rules and processes offer less flexibility but a high degree of reliability in execution. In other words, implementing AI agents is not exclusively about autonomy. It is rather about how existing rules and workflows can be enriched by agentic systems and generative AI, specifically in areas where variability is necessary and difficult to map using deterministic processes, but always at the cost of variance and reduced reliability. Companies have a wide variety of business processes, each with its own automation potential, and AI agents can support them to varying degrees.
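
One way to picture this split is to keep the mandatory checks as plain, deterministic code and hand only the variable part to an agent. The sketch below assumes a made-up score scale (`SCORE_SCALE`) and hypothetical function names; the `explain` callable stands in for an agent that would, for instance, phrase the outcome for the customer.

```python
from datetime import date
from typing import Callable, List, Tuple

# Hypothetical scale: (minimum score, label), checked from highest to lowest.
SCORE_SCALE: List[Tuple[float, str]] = [
    (97.5, "very low risk"),
    (95.0, "low risk"),
    (90.0, "elevated risk"),
]


def id_card_is_valid(expiry: date, today: date) -> bool:
    # Deterministic rule: the ID card must not be expired.
    return expiry >= today


def classify_score(score: float) -> str:
    # Deterministic rule: compare the score with the defined scale.
    for minimum, label in SCORE_SCALE:
        if score >= minimum:
            return label
    return "high risk"


def decide(expiry: date, score: float, today: date, explain: Callable[[str], str]) -> str:
    # Mandatory verification steps run first and allow no deviation.
    if not id_card_is_valid(expiry, today):
        return "rejected: ID card expired"
    label = classify_score(score)
    if label == "high risk":
        return "rejected: score below defined scale"
    # Only the flexible part is delegated to the (stubbed) agent.
    return explain(label)
```

For example, `decide(date(2030, 1, 1), 96.0, date(2025, 1, 1), lambda label: f"approved ({label})")` passes both fixed checks before the stubbed agent produces the final wording. The design choice is that the agent can never skip or reorder the mandatory steps, because they are not under its control.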

Marina Danilevsky, Senior Research Scientist for Language Technologies at IBM, put it this way in “AI agents in 2025: Expectations vs. reality”:

If something is true one time, that doesn’t mean it’s true all the time. Are there a few things that agents can do? Sure. Does that mean you can agentize any flow that pops into your head? No.

Another challenge in the enterprise context is controlling and monitoring a large number of agents. This is already evident in the example of the credit agent, which in turn relies on the money laundering agent, the risk assessment agent, and others. Agents can also communicate with one another or make use of sub-agents, which can quickly become confusing. Cataloging and governance of agents is therefore an important aspect that enterprises should not underestimate.
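
A catalog does not have to be complicated to be useful. As a minimal sketch, assuming a hypothetical `AgentEntry` record with an owner and a list of dependencies, the following Python code resolves every agent that a given agent relies on, directly or indirectly. The agent and owner names are invented for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class AgentEntry:
    name: str
    owner: str                                   # team accountable for the agent
    depends_on: List[str] = field(default_factory=list)


def all_dependencies(
    catalog: Dict[str, AgentEntry],
    name: str,
    seen: Optional[Set[str]] = None,
) -> Set[str]:
    """Collect direct and indirect dependencies of an agent, tolerating cycles."""
    seen = set() if seen is None else seen
    for dependency in catalog[name].depends_on:
        if dependency not in seen:
            seen.add(dependency)
            all_dependencies(catalog, dependency, seen)
    return seen


# Hypothetical catalog for the loan example.
CATALOG = {
    entry.name: entry
    for entry in [
        AgentEntry("credit_agent", "lending", ["money_laundering_agent", "risk_agent"]),
        AgentEntry("money_laundering_agent", "compliance"),
        AgentEntry("risk_agent", "risk", ["score_agent"]),
        AgentEntry("score_agent", "risk"),
    ]
}
```

Calling `all_dependencies(CATALOG, "credit_agent")` lists every agent involved in a credit decision, which is the kind of overview governance needs before an agent is changed or retired.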

Preparing for AI’s broader impact

Looking back at the 2025 Gartner Hype Cycle for AI, in addition to AI agents and generative AI, there are many other AI topics that are likely to affect companies over the next 2–10 years. Even today, it’s important not to focus solely on agents and agentic AI, but to take a broader view — many developments are expected to mature within the next 2–5 years, and enterprises should start factoring them into their mid- and long-term AI strategies. Not only from a technological perspective, but also from an organizational one. And this is just the AI view!

To close the loop on the rocket metaphor: as AI agents and technologies continue to evolve, the rocket you build today must safely navigate the current AI landscape while remaining ready for the many innovations yet to come — it needs to be secure, open, expandable, controllable, and provide space for everyone.
